“EthoLoop”: from notebook and binoculars to deep-learning-based behavioral analysis

Accurate tracking and analysis of animal behavior are crucial for modern systems neuroscience.

However, following freely moving animals in naturalistic, three-dimensional or nocturnal environments remains a major challenge.

EthoLoop, a framework for studying the neuroethology of freely roaming animals, addresses this challenge.

Combining real-time optical tracking and behavioral analysis with remote-controlled stimulus-reward boxes, it allows direct interactions with animals in their habitat.

To achieve this, XIMEA cameras are installed in both the three-dimensional tracking system and the close-up system.

For image processing, each camera is connected to its own embedded AI computing device, an NVIDIA Jetson TX2.

Introduction

Ethology, the detailed analysis of animal behavior, provides important clues about the evolution and function of the underlying brain circuits.

Tracking behaviors in naturalistic environments thus remains a key part of modern neuroscience.

Nevertheless, in many situations, a simple notebook and binoculars are neither sufficient nor efficient.

Quantitative behavioral analysis is particularly challenging when animals move fast or in complete darkness.

To obtain accurate and reproducible behavioral data from freely roaming animals, Ali Nouri Zonoz, a PhD student in the laboratory of Prof. Daniel Huber at the University of Geneva, developed a novel type of 3D tracking system termed “EthoLoop”.

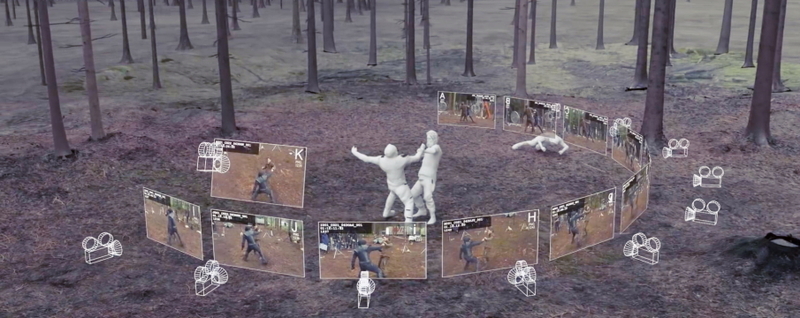

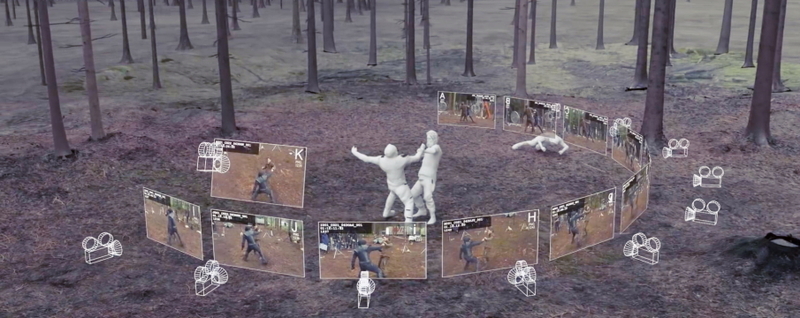

EthoLoop uses real-time, optical tracking to localize the animals in three dimensions and sends the tracked position to automated gimbal-mounted cameras, thus providing continuous close-up views of the tracked individuals.

EthoLoop is designed to follow tiny, portable infrared markers carried as a necklace by the animals.

This enables tracking and identification in complete darkness.

To achieve this at high speed and with minimal latency, multiple high-speed XIMEA cameras (MQ013RG-ON) are installed in the tracking arena.

Each camera is connected to its own embedded computing device (NVIDIA Jetson) for image processing.

Automated animal training

The close-up images are analyzed in real time using the latest computer gaming hardware and machine learning methods.

This makes it possible to deliver behavior-triggered feedback or rewards to the animals.

Using wirelessly connected reward boxes distributed throughout the environment, the researchers showed that EthoLoop is fast and accurate enough to condition and reinforce specific behaviors in freely moving primates and rodents, effectively taking over the role of a professional animal trainer.
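To make the closed loop concrete, here is a minimal sketch of one decision step: if a behavior classifier running on the close-up images reports the target behavior with enough confidence, the reward box nearest to the animal is selected. Everything here is an illustrative assumption, not the authors' implementation: the `RewardBox` type, the `closed_loop_step` function, the behavior label "rearing" and the 0.9 confidence threshold are all hypothetical.

```python
import math
from dataclasses import dataclass
from typing import List, Optional, Tuple

Point = Tuple[float, float, float]


@dataclass
class RewardBox:
    # Hypothetical representation of a wirelessly connected reward box.
    box_id: int
    position: Point  # metres, in arena coordinates


def nearest_box(animal_pos: Point, boxes: List[RewardBox]) -> RewardBox:
    """Return the reward box closest to the animal's current 3D position."""
    return min(boxes, key=lambda b: math.dist(animal_pos, b.position))


def closed_loop_step(behavior_label: str, confidence: float, animal_pos: Point,
                     boxes: List[RewardBox], target_behavior: str = "rearing",
                     threshold: float = 0.9) -> Optional[int]:
    """Return the id of the box to activate on this frame, or None.

    behavior_label and confidence are assumed to come from a classifier
    applied to the close-up images (hypothetical interface).
    """
    if behavior_label == target_behavior and confidence >= threshold:
        return nearest_box(animal_pos, boxes).box_id
    return None


boxes = [RewardBox(1, (0.0, 0.0, 0.0)), RewardBox(2, (2.0, 1.0, 1.5))]
print(closed_loop_step("rearing", 0.95, (1.8, 1.1, 1.2), boxes))   # 2
print(closed_loop_step("grooming", 0.99, (1.8, 1.1, 1.2), boxes))  # None
```

Rewarding at the nearest box is just one plausible policy; a real experiment might instead reward at a fixed location to shape navigation.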

They further showed that stimulating the brain’s reward centers was sufficient to induce conditioning.

These results were published in the October issue of Nature Methods.

The third dimension

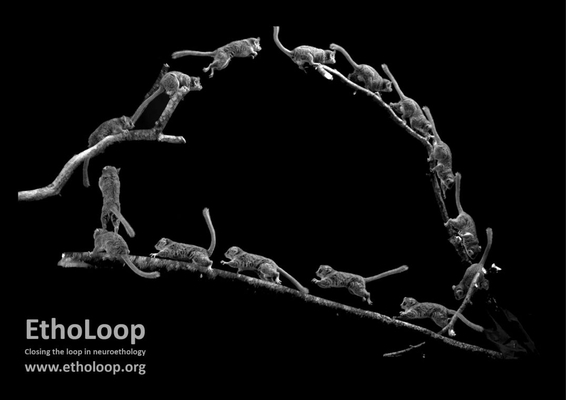

Finally, the EthoLoop system was used to address a fundamental question in neuroscience: how is the third dimension represented in the brains of primates freely climbing in trees?

By recording neuronal activity in the hippocampus, a brain region involved in navigation and memory, the researchers identified different cells that were active when the animal passed a specific location or moved in a specific direction.
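A common way to find such location-selective cells is an occupancy-normalized rate map: the number of spikes in each spatial bin divided by the time the animal spent in that bin. The study's exact analysis is not described here, so the sketch below is only a generic illustration; the `rate_map` function, its inputs (tracked positions sampled every `dt` seconds and the tracked position at each spike) and the bin size are assumptions.

```python
from collections import Counter


def rate_map(positions, dt, spike_positions, bin_size):
    """Occupancy-normalized firing rate (spikes per second) per 3D bin.

    positions: tracked (x, y, z) samples taken every dt seconds.
    spike_positions: tracked (x, y, z) position at each spike time.
    Bins the animal never visited are left out of the result.
    """
    def to_bin(p):
        # Map a position to integer bin indices along each axis.
        return tuple(int(c // bin_size) for c in p)

    occupancy = Counter(to_bin(p) for p in positions)     # samples per bin
    spikes = Counter(to_bin(p) for p in spike_positions)  # spikes per bin
    return {b: spikes[b] / (n * dt) for b, n in occupancy.items()}


positions = [(0.1, 0, 0), (0.2, 0, 0), (1.1, 0, 0), (1.2, 0, 0)]
spikes = [(0.15, 0, 0), (0.12, 0, 0), (0.18, 0, 0)]
print(rate_map(positions, 0.5, spikes, 1.0))  # {(0, 0, 0): 3.0, (1, 0, 0): 0.0}
```

A cell whose map shows a high rate in one bin and near zero elsewhere would be a candidate place cell; direction-selective cells would be analyzed the same way with heading bins instead of position bins.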

For the complete study, please read the full article: https://www.nature.com/articles/s41592-020-0961-2

The full documentation of the system can be found at: www.etholoop.org.

Specifications

Real-time tracking in three-dimensional environments

To track animals in large-scale three-dimensional environments, EthoLoop uses multiple MQ013RG-ON cameras from XIMEA.

Thanks to their small size (26 × 26 × 24 mm) and their ability to reach more than 1000 fps at 640 × 400 resolution, the cameras are an ideal fit for this online tracking system.

To extract the two-dimensional marker position from each viewpoint, GPU-based NVIDIA Jetson TX2 embedded computers are used.
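As an illustration of what this 2D extraction step can look like, the sketch below assumes the infrared marker shows up as a bright blob in an otherwise dark image and computes the intensity-weighted centroid of all pixels above a threshold. This is a simplified, pure-Python stand-in for a GPU implementation; the `marker_centroid` function and the threshold value are hypothetical, not EthoLoop's actual code.

```python
def marker_centroid(frame, threshold):
    """Return the (x, y) intensity-weighted centroid of pixels above threshold.

    frame is a list of rows of pixel intensities. Returns None when no
    pixel exceeds the threshold (marker not visible in this view).
    """
    total = sx = sy = 0.0
    for y, row in enumerate(frame):
        for x, value in enumerate(row):
            if value > threshold:
                total += value
                sx += x * value
                sy += y * value
    if total == 0:
        return None
    return (sx / total, sy / total)


frame = [
    [0, 0, 0, 0],
    [0, 200, 200, 0],
    [0, 200, 200, 0],
    [0, 0, 0, 0],
]
print(marker_centroid(frame, 100))  # (1.5, 1.5)
```

Weighting by intensity gives sub-pixel precision, which matters when only a few pixels of a small marker are lit.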

They then wirelessly transmit these data to a central host machine running Linux, which performs the 3D reconstruction.

This makes it possible to follow individuals at a rate of around 800 Hz with a latency below 10 ms.
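One standard way to reconstruct a 3D point from several views is least-squares ray intersection: each calibrated camera turns its 2D detection into a ray (camera center plus viewing direction), and the host finds the point p minimizing the summed squared distance to all rays, which leads to the linear system (Σ (I − d dᵀ)) p = Σ (I − d dᵀ) c. This is a sketch of that generic technique, not necessarily EthoLoop's reconstruction method; the calibration step that produces the rays is assumed, and the function names are hypothetical.

```python
import math


def _projector(d):
    """Return I - d d^T for a unit vector d, as a 3x3 list of lists."""
    return [[(1.0 if i == j else 0.0) - d[i] * d[j] for j in range(3)]
            for i in range(3)]


def _det3(m):
    return (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
            - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
            + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))


def _solve3(a, b):
    """Solve the 3x3 system a x = b with Cramer's rule."""
    d = _det3(a)
    if abs(d) < 1e-12:
        raise ValueError("rays are (nearly) parallel; position is undetermined")
    x = []
    for k in range(3):
        m = [row[:] for row in a]
        for i in range(3):
            m[i][k] = b[i]  # replace column k by the right-hand side
        x.append(_det3(m) / d)
    return x


def triangulate(rays):
    """Least-squares 3D point closest to all rays.

    rays: list of (center, direction) pairs, one per camera, in arena coordinates.
    """
    a = [[0.0] * 3 for _ in range(3)]
    b = [0.0] * 3
    for center, direction in rays:
        norm = math.sqrt(sum(v * v for v in direction))
        p = _projector([v / norm for v in direction])
        for i in range(3):
            for j in range(3):
                a[i][j] += p[i][j]
                b[i] += p[i][j] * center[j]
    return _solve3(a, b)


# Three hypothetical cameras whose rays all pass through (1, 1, 1).
rays = [((0, 0, 0), (1, 1, 1)), ((2, 0, 0), (-1, 1, 1)), ((0, 2, 0), (1, -1, 1))]
print([round(v, 6) for v in triangulate(rays)])  # [1.0, 1.0, 1.0]
```

With noisy detections the rays no longer meet exactly, and the least-squares solution gives a sensible compromise; any two non-parallel rays are enough, and additional cameras improve robustness to occlusion.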
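To put these two figures side by side: at 800 Hz a new position arrives every 1.25 ms, so a latency below 10 ms corresponds to fewer than eight update intervals between a movement and its tracked position.

```latex
T = \frac{1}{f} = \frac{1}{800\ \text{Hz}} = 1.25\ \text{ms},
\qquad
\frac{10\ \text{ms}}{1.25\ \text{ms}} = 8
```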

For more information on assembling the EthoLoop tracking system, please visit http://etholoop.org/Tracking.html.

Close-up system

The real-time 3D coordinates are used to control a custom-made close-up system that captures continuous magnified images from the tracked animal.

The system is built around a gimbal driven by two smart servos (Dynamixel), with an MQ013RG-E2 camera placed at the intersection of their rotation axes.
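Pointing such a gimbal at a tracked 3D position reduces to two angles: pan about the vertical axis and tilt above the horizontal plane. The sketch below computes them with `atan2`, assuming a right-handed arena frame with z pointing up and both rotation axes intersecting at the camera position; conversion of the angles into Dynamixel servo commands is omitted, and the `pan_tilt` function is hypothetical.

```python
import math


def pan_tilt(target, gimbal_origin=(0.0, 0.0, 0.0)):
    """Return (pan, tilt) in degrees that point the camera at target.

    Assumes z points up, pan is measured about the vertical axis from +x
    towards +y, and tilt is measured upward from the horizontal plane.
    """
    dx = target[0] - gimbal_origin[0]
    dy = target[1] - gimbal_origin[1]
    dz = target[2] - gimbal_origin[2]
    pan = math.degrees(math.atan2(dy, dx))
    tilt = math.degrees(math.atan2(dz, math.hypot(dx, dy)))
    return pan, tilt


print([round(a, 3) for a in pan_tilt((1.0, 1.0, 0.0))])  # [45.0, 0.0]
```

Using `atan2` rather than `atan` keeps the correct quadrant for targets behind or to the side of the gimbal.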

The high sensitivity of the XIMEA camera at near-infrared wavelengths (above 700 nm) enables frame acquisition in dark environments with short exposure times, below 10 ms.

This results in a high sampling rate and sharp images of fast-moving animals.
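Why short exposures matter can be seen from a rough estimate: motion blur is about the distance the animal travels during one exposure, Δx = v · t_exp. With an illustrative speed of 2 m/s (an assumed value, not a measurement from the study), a 5 ms exposure limits the blur to 10 mm, whereas a 30 ms exposure would smear the animal over 60 mm.

```latex
\Delta x = v \, t_{\text{exp}}:
\qquad
2\ \tfrac{\text{m}}{\text{s}} \times 5\ \text{ms} = 10\ \text{mm},
\qquad
2\ \tfrac{\text{m}}{\text{s}} \times 30\ \text{ms} = 60\ \text{mm}
```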

Furthermore, the gimbal camera is equipped with a liquid lens autofocus system from Optotune, which enables ultrafast focusing on the tracked position in space.
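Because the 3D position of the animal is already known, focus can be set directly from the camera-to-target distance rather than searched for. A minimal sketch under a simplifying assumption: the main lens is focused at infinity and the liquid lens acts as a thin add-on lens, so bringing an object at distance d (in metres) into focus needs an optical power of about P = 1/d diopters. The `focus_power_diopters` function and its tunable range are hypothetical; this is not Optotune's API, and a real system would rely on a calibrated distance-to-control mapping.

```python
import math


def focus_power_diopters(camera_pos, target_pos, p_min=0.0, p_max=10.0):
    """Optical power (diopters) for a thin add-on liquid lens.

    Uses the close-up-lens approximation P = 1 / d with d in metres, and
    clamps the result to [p_min, p_max], a hypothetical tunable range.
    """
    d = math.dist(camera_pos, target_pos)
    if d == 0:
        raise ValueError("target coincides with the camera")
    return min(max(1.0 / d, p_min), p_max)


print(focus_power_diopters((0, 0, 0), (0, 0, 2.0)))  # 0.5
```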

Assembly instructions for the close-up system can be found at: http://etholoop.org/Closeup.html

Remote controlled box

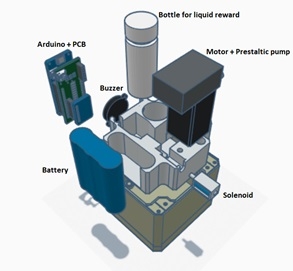

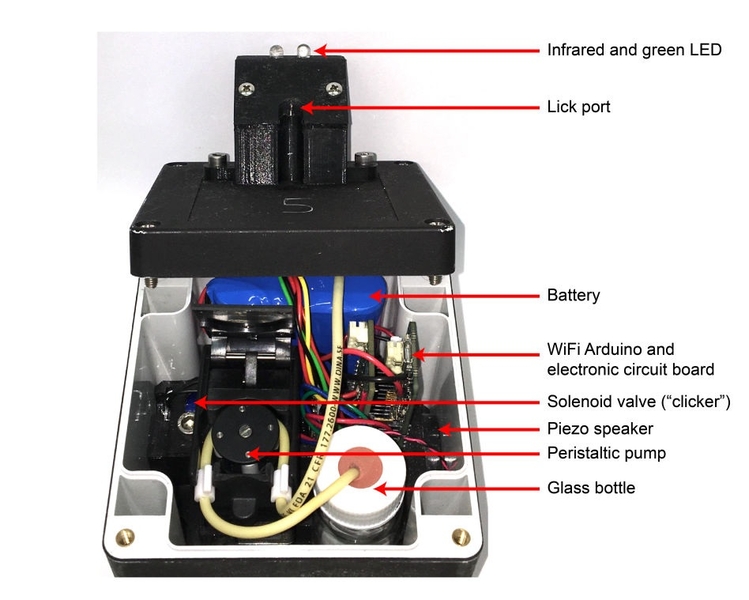

EthoLoop controls multiple battery-powered, remote-controlled boxes called RECO-Boxes.

These boxes enable direct interaction with the tracked animals by providing stimuli of different modalities and liquid rewards.

The RECO-Box is built around a wirelessly controlled microcontroller board, the Arduino MKR 1000.

Assembly instructions and 3D-printable parts can be found at: http://etholoop.org/RecBox.html
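To illustrate how a host could trigger a wirelessly connected box, the sketch below sends a small JSON command as a UDP datagram. The transport (UDP), the message fields (`box_id`, `action`, `duration_ms`) and the `send_box_command` function are all hypothetical and are not taken from the RECO-Box firmware; the loopback demo uses a local socket as a stand-in for the box's Wi-Fi receiver.

```python
import json
import socket


def send_box_command(sock, address, box_id, action, duration_ms):
    """Send one command to a reward box as a JSON-encoded UDP datagram.

    The message format is a hypothetical example, not the RECO-Box protocol.
    Returns the bytes that were sent.
    """
    message = {"box_id": box_id, "action": action, "duration_ms": duration_ms}
    payload = json.dumps(message).encode("utf-8")
    sock.sendto(payload, address)
    return payload


if __name__ == "__main__":
    # Loopback demo: a local socket stands in for the box's receiver.
    receiver = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
    receiver.bind(("127.0.0.1", 0))  # let the OS pick a free port
    receiver.settimeout(2.0)
    sender = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
    send_box_command(sender, receiver.getsockname(), 3, "reward", 200)
    data, _ = receiver.recvfrom(1024)
    print(json.loads(data))
    sender.close()
    receiver.close()
```

UDP keeps per-command latency low, but datagrams can be lost on a real wireless link, so a practical design would add acknowledgements or retries.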

Conclusion

Scientific research goes hand in hand with innovative technology.

Researchers from the laboratory of Prof. Daniel Huber at the Department of Basic Neuroscience, University of Geneva, have put this principle into practice with EthoLoop.

Overall, EthoLoop provides a novel type of closed-loop behavioral analysis system able to track, analyze and train freely moving animals.

Such active interaction with the tracked subjects can save countless hours of tedious animal observation and training by human observers.

In addition, thanks to the installed XIMEA cameras, it provides views of behaving animals with unprecedented quality and precision.

EthoLoop picture gallery: https://etholoop.org/Gallery.html

Contacts:

HUBERLAB at Geneva University

Département des neurosciences fondamentales

Université de Genève

CMU, Rue Michel Servet 1

CH-1211 Genève 4

Phone: +41(0)22 379 53 47

Fax: +41(0)22 379 54 02

For all inquiries please contact: [email protected]
